Local Anatomically-Constrained Facial Performance Retargeting

Abstract

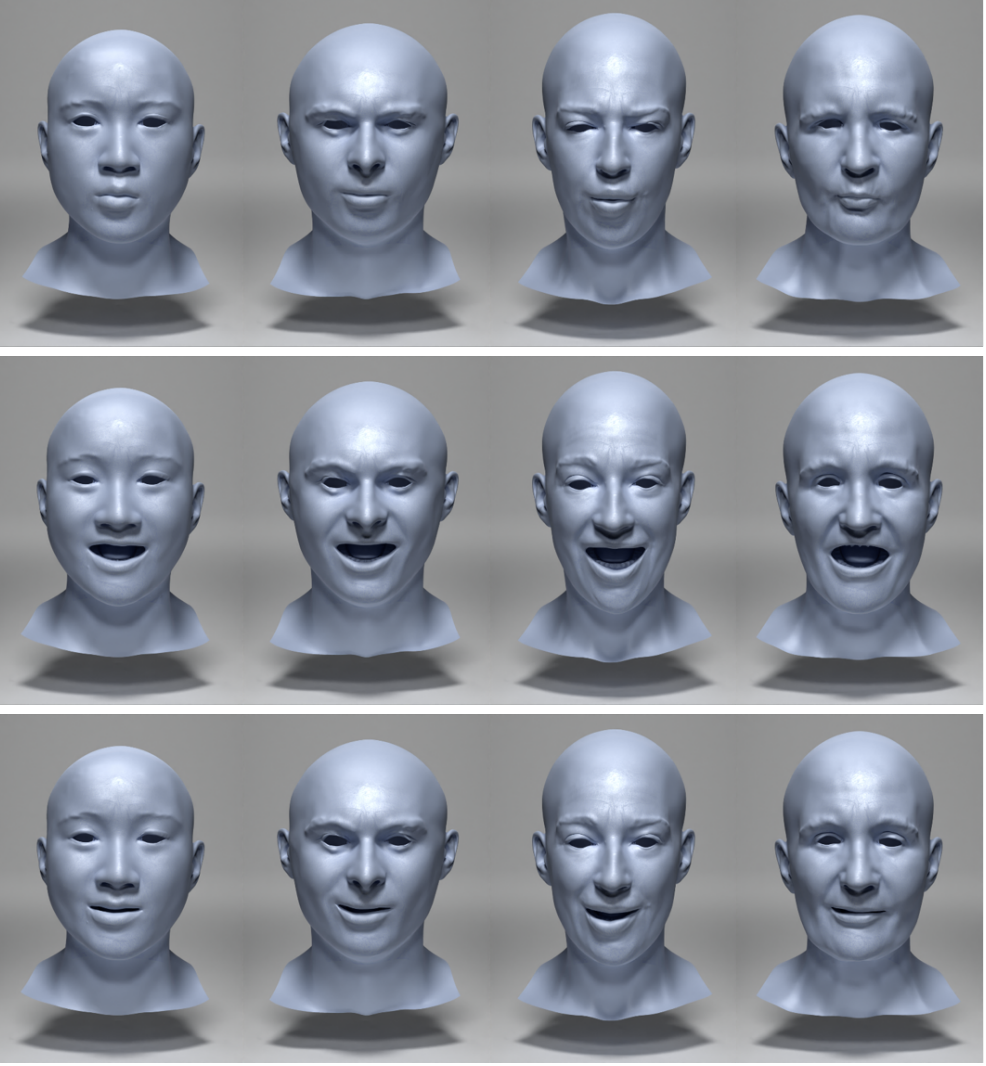

Generating realistic facial animation for CG characters and digital doubles is one of the hardest tasks in animation. A typical production workflow involves capturing the performance of a real actor using motion capture technology and transferring the captured motion to the target digital character. This process, known as retargeting, has been used for over a decade, and typically relies on either large blendshape rigs that are expensive to create, or direct deformation transfer algorithms that operate on individual geometric elements and are prone to artifacts. We present a new method for high-fidelity offline facial performance retargeting that is neither expensive nor artifact-prone. Our two-step method first transfers local expression details to the target, and then performs a global face surface prediction that uses anatomical constraints to stay in the feasible shape space of the target character. Our method also offers artists familiar blendshape controls for making fine adjustments to the retargeted animation. As such, our method is ideally suited for the complex task of human-to-human 3D facial performance retargeting, where the quality bar is extremely high in order to avoid the uncanny valley, while also being applicable to the more common human-to-creature settings. We demonstrate the superior performance of our method over traditional deformation transfer algorithms, and achieve quality comparable to current blendshape-based techniques used in production while requiring significantly fewer input shapes at setup time. A detailed user study corroborates the realism and absence of artifacts in the animations generated by our method in comparison to existing techniques.